Intelligent Retrieval-Augmented Generation (RAG)

CORE HIGHLIGHT

OBJECTIVE

Eliminate the manual “Proposal Bottleneck” by transforming static corporate archives into a context-aware, generative intelligence layer.

Introduction: Beyond Basic Automation

In high-stakes enterprise contracting, the barrier to success is often the “Knowledge Gap.” Expert teams spend thousands of hours manually digging through old PDF and Word files to find past performance records and technical specifications. General-purpose AI tools often fail here because they lack company-specific context, which leads to generic answers or inaccurate “hallucinations.”

Luna AI was engineered to solve this by using LangChain as a dedicated Reasoning Layer. Instead of simply “asking a chatbot,” Luna AI uses LangChain to orchestrate an “Institutional Memory”: it retrieves relevant facts from secure document vaults and uses them to draft grounded, professional proposals.

The Architecture: LangChain as the “Cognitive Router”

To handle the nuance of legal and technical documents, we moved away from simple prompt wrappers around a language model. We built a modular backend where LangChain acts as the central brain, coordinating the flow of data between raw files and the final AI output.

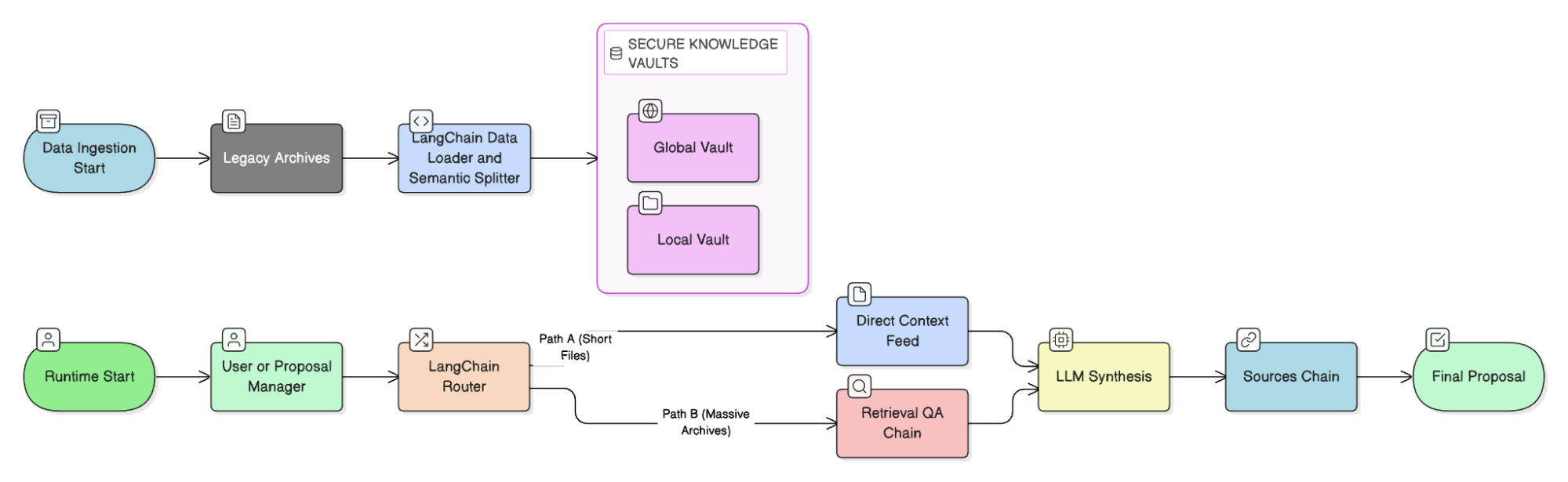

The Knowledge Ingestion Pipeline

The foundation of Luna AI is its ability to “understand” and “index” thousands of pages of historical documents.

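- The Process: We used LangChain’s Document Loaders to extract text, along with metadata such as the source file name, from PDF and Word files with complex layouts.

- Smart Chunking: Using the Recursive Character Text Splitter (RecursiveCharacterTextSplitter), the system breaks documents into size-bounded chunks, splitting on paragraph breaks first and falling back to line breaks, then spaces, only when a piece is still too long. This keeps paragraphs intact wherever possible, and the splitter's configurable chunk overlap carries surrounding context across chunk boundaries, so each piece saved into the searchable database can be understood on its own.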
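To make the chunking step concrete, here is a minimal, dependency-free Python sketch of the recursive strategy. It is not LangChain's implementation and does not reproduce its API: the function name recursive_split and the 200-character default are illustrative assumptions, while the separator order (paragraph, line, word, character) mirrors the splitter's default separators. Chunk overlap is omitted for brevity.

```python
def recursive_split(text, chunk_size=200, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters, trying coarse
    separators (paragraphs) before fine ones (lines, words, characters)."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut into fixed-size character windows.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for part in text.split(sep):
        candidate = part if not current else current + sep + part
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(part) <= chunk_size:
            current = part
        else:
            # This piece alone is too big: recurse with the next, finer separator.
            chunks.extend(recursive_split(part, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return [c for c in chunks if c.strip()]
```

Because coarse separators are tried first, a paragraph that fits within chunk_size is always kept whole; only oversized paragraphs fall through to line, word, or character splits.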

Workflow Deep Dive: The Data-to-Draft Pipeline

Adaptive Retrieval Strategy (The Efficiency Gate)

To keep response times low, we implemented a decision point, managed by LangChain, that chooses between two retrieval paths based on document size:

- The Short-Context Path: For smaller reference files, the system feeds the entire document directly into the AI for a fast, comprehensive response.

- The High-Volume Path: For large archives that would not fit in the model's context window, the system triggers the Retrieval QA Chain (RetrievalQA). This runs a semantic (embedding-based) search and passes along only the most relevant chunks, the “Knowledge Nuggets,” needed to answer the current proposal requirement.
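The routing decision can be sketched as follows. This is a simplified, stand-alone Python illustration rather than Luna AI's code or LangChain's RetrievalQA API: the MAX_STUFF_CHARS threshold, the character count used as a stand-in for a token count, and the bag-of-words embed function (a placeholder for a real embedding model) are all hypothetical.

```python
import math
import re
from collections import Counter

MAX_STUFF_CHARS = 4000  # hypothetical cut-off for the short-context path

def embed(text):
    """Placeholder 'embedding': word counts. A real system calls an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_context(question, document, chunks, k=3):
    """Choose a path and return (path_name, context_text) for the prompt."""
    if len(document) <= MAX_STUFF_CHARS:
        # Short-context path: send the whole document.
        return "short-context", document
    # High-volume path: rank pre-split chunks by similarity and keep the top k.
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return "high-volume", "\n\n".join(ranked[:k])
```

In production, chunk embeddings would be computed once at ingestion time and stored in a vector database (the Embed and Store steps) rather than recomputed for every question, as this sketch does for brevity.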

Secure Multi-Tenancy (Global vs. Local)

Luna AI uses LangChain to enforce strict data boundaries, which is critical for enterprise security:

- Global Knowledge: A secure vault for company-wide credentials, bios, and standard service descriptions.

- Local Knowledge: A private, project-specific vault for data that only applies to a single, current bid.

- Precision Filtering: We implemented a Custom Retriever that allows the system to filter results by specific file names, ensuring the AI only “listens” to the specific documents a user has authorized.
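The Global/Local split and the file-name filter can both be expressed as metadata checks applied before similarity ranking, so unauthorized chunks never become candidates. The Python sketch below assumes a hypothetical metadata scheme in which each chunk records its source file name and a scope that is either "global" or a project identifier; Chunk and filtered_candidates are illustrative names, not LangChain classes.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    source: str  # file name the chunk was loaded from
    scope: str   # "global" or a project id (hypothetical metadata scheme)

def filtered_candidates(chunks, project_id, allowed_files=None):
    """Return chunks from the global vault or this project's local vault,
    optionally restricted to the file names the user has authorized."""
    allowed = None if allowed_files is None else set(allowed_files)
    return [
        c for c in chunks
        if c.scope in ("global", project_id)
        and (allowed is None or c.source in allowed)
    ]
```

Filtering before ranking, rather than after, means a highly similar chunk from another bid can neither crowd out authorized results nor leak into the prompt. Many LangChain vector stores accept a metadata filter at search time, though the filter syntax varies by store.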

Traceable Authoring (The Sources Chain)

To make every generated claim verifiable, we used the Retrieval QA with Sources Chain (RetrievalQAWithSourcesChain).

- The Outcome: Every generated paragraph or template response comes with a “Digital Receipt.” The system automatically cites the source document, allowing human editors to verify facts against the original PDF or Word file instantly.
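A citation is only useful as a receipt if it points at a document that was actually retrieved. The sketch below assumes, hypothetically, that the model is prompted to end its answer with a line of the form "SOURCES: file1, file2"; it then checks each cited name against the sources of the retrieved chunks and separates verified citations from any the model may have invented. It is a stand-alone Python illustration, not the internals of LangChain's sources chain, and split_answer_and_sources is an illustrative name.

```python
import re

def split_answer_and_sources(llm_output, retrieved_sources):
    """Split model output into (answer, verified_sources, rejected_sources)."""
    # Hypothetical output convention: answer text, then a trailing "SOURCES:" line.
    parts = re.split(r"\n?SOURCES?:\s*", llm_output, maxsplit=1)
    answer = parts[0].strip()
    verified, rejected = [], []
    if len(parts) == 2:
        for name in (s.strip() for s in parts[1].split(",")):
            if not name or name in verified or name in rejected:
                continue  # skip blanks and duplicates
            # Only accept citations that match a document actually retrieved.
            (verified if name in retrieved_sources else rejected).append(name)
    return answer, verified, rejected
```

Rejected names can be surfaced to the human editor for review rather than silently dropped.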

Results and ROI Analysis

Integrating LangChain-based orchestration fundamentally changed the speed and quality of the bidding process.

| Metric | Manual/Legacy Process | Luna AI (LangChain Orchestration) | Improvement |
| --- | --- | --- | --- |
| Research Speed | 4-8 Business Hours | < 45 Seconds | 99% Faster |
| Factual Accuracy | Variable (Human Error) | High (Fact-Grounded) | Consistent Quality |
| Authoring Effort | High (Manual Synthesis) | Zero (Autonomous Draft) | 80% Cost Reduction |
| Data Traceability | None (Source unknown) | Automatic (Source Citations) | 100% Auditability |
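As a sanity check, the “99% Faster” research-speed figure follows from the table's own endpoints. Treating 45 seconds as the upper bound for Luna AI and 4 to 8 hours as the manual range, the reduction in research time is:

```latex
\text{reduction} = 1 - \frac{t_{\text{Luna}}}{t_{\text{manual}}}, \qquad
1 - \frac{45\ \text{s}}{4 \times 3600\ \text{s}} = 1 - \frac{45}{14400} \approx 0.9969, \qquad
1 - \frac{45\ \text{s}}{8 \times 3600\ \text{s}} = 1 - \frac{45}{28800} \approx 0.9984
```

The reduction is therefore at least about 99.7%, so “99% Faster” is a conservative rounding.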

Conclusion: The Sovereign Knowledge Enterprise

Luna AI demonstrates that LangChain is more than a tool: it is a Contextual Reasoning Layer. By standardizing the “Load → Split → Embed → Store → Retrieve” cycle, the organization has built a system that doesn’t just write; it remembers. Luna AI turns a company’s past work into its greatest future asset, providing a production-grade blueprint for the next generation of intelligent document work.